Stock Analyzer Platform

What It Is

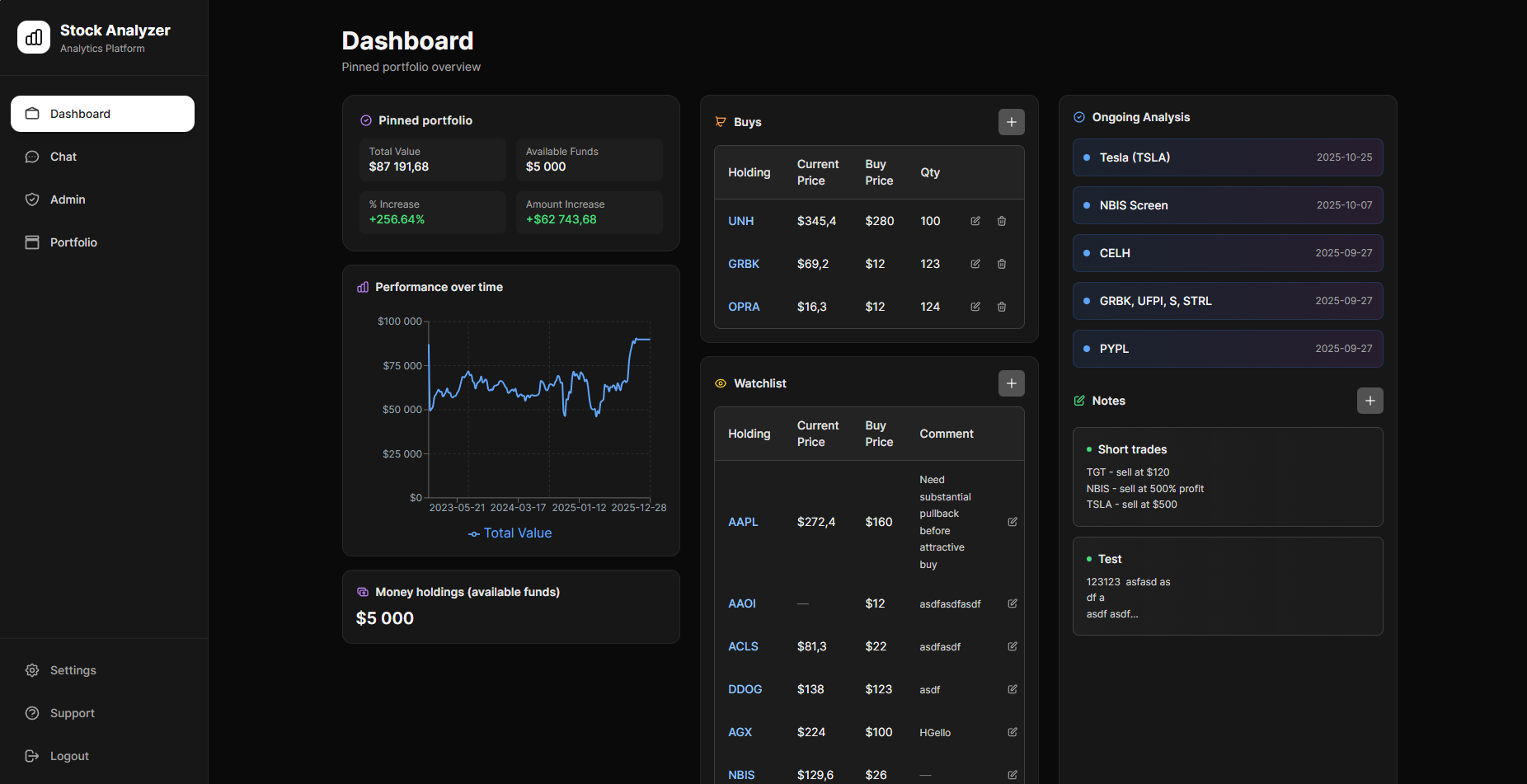

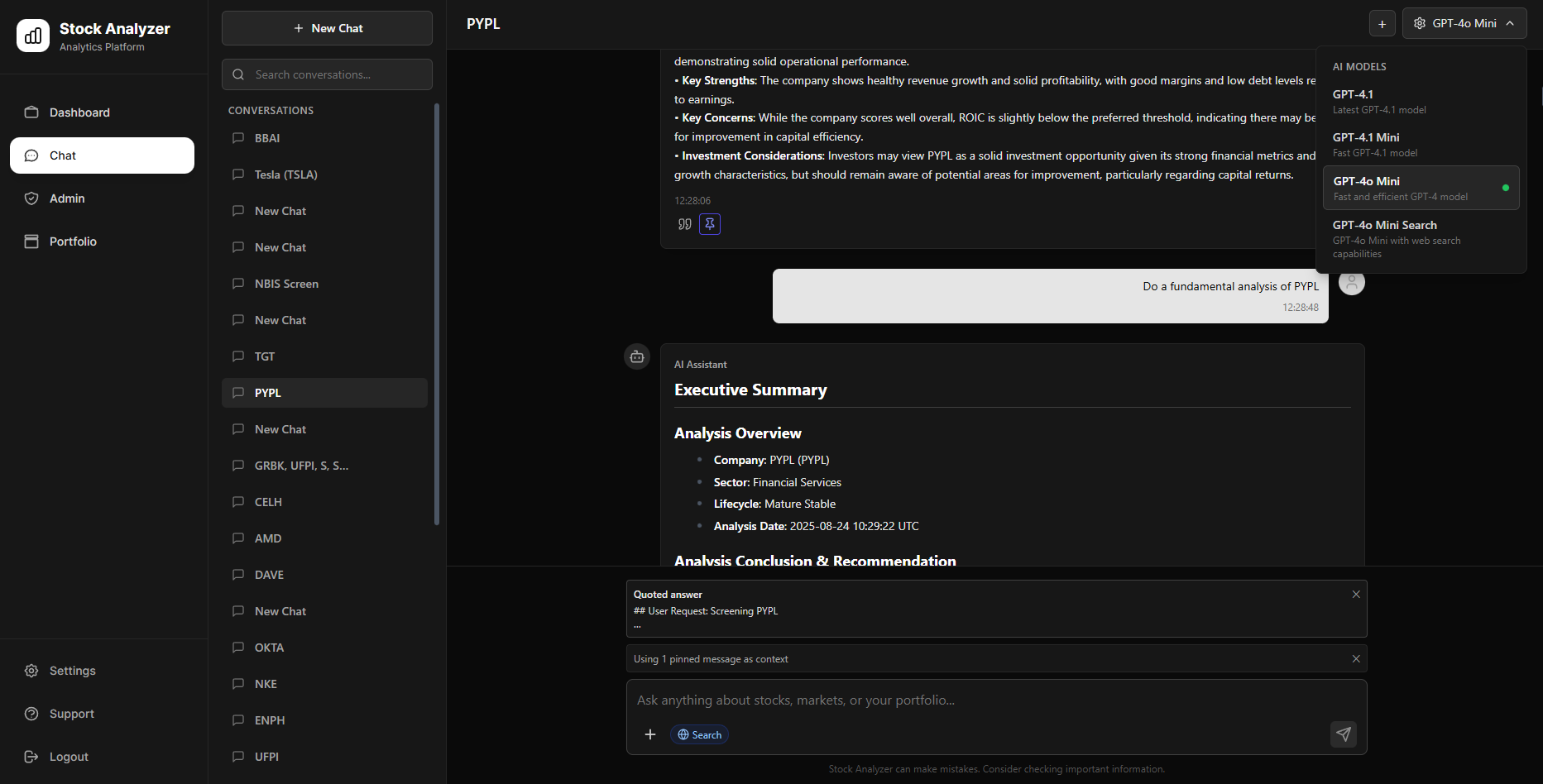

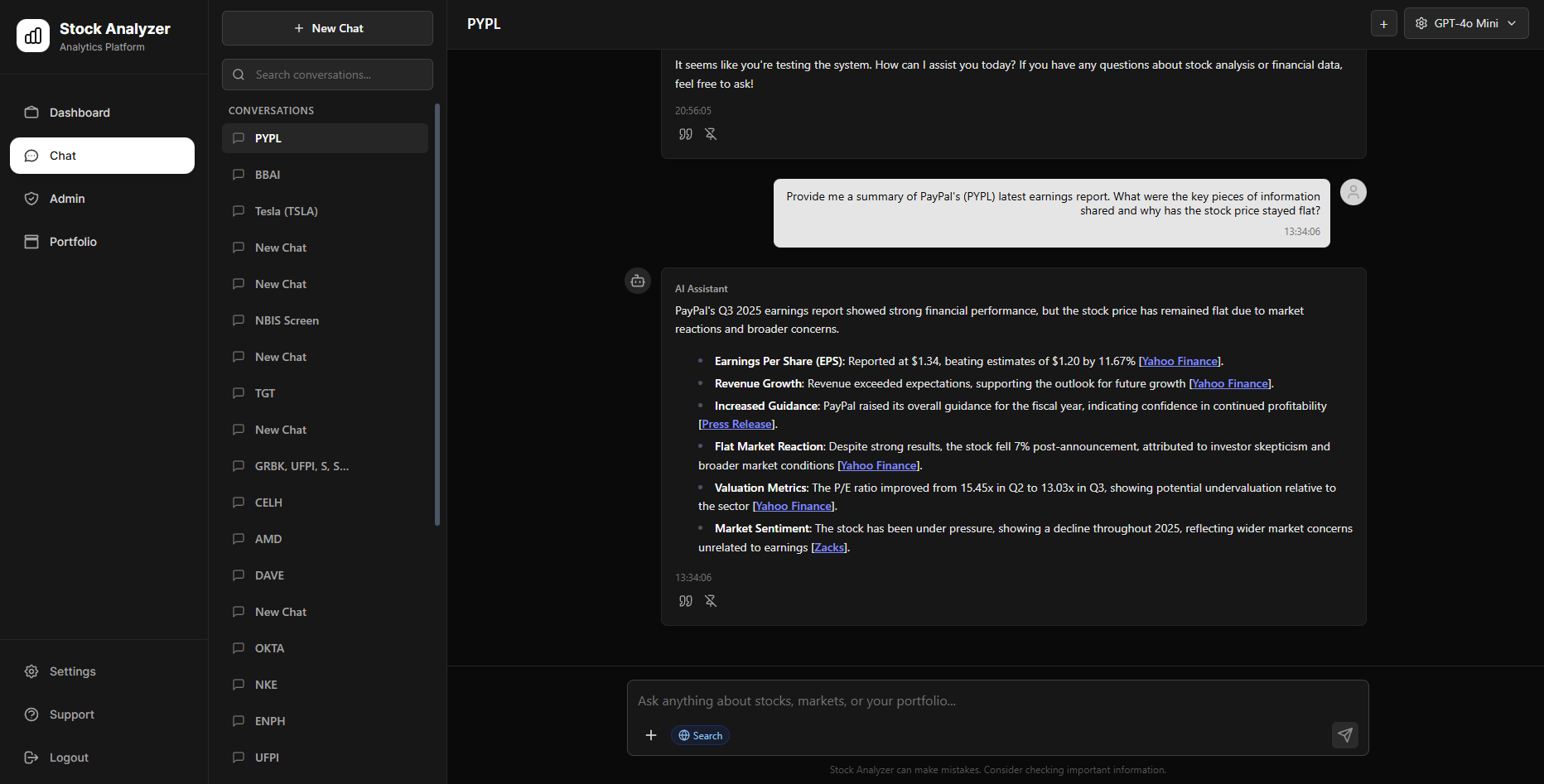

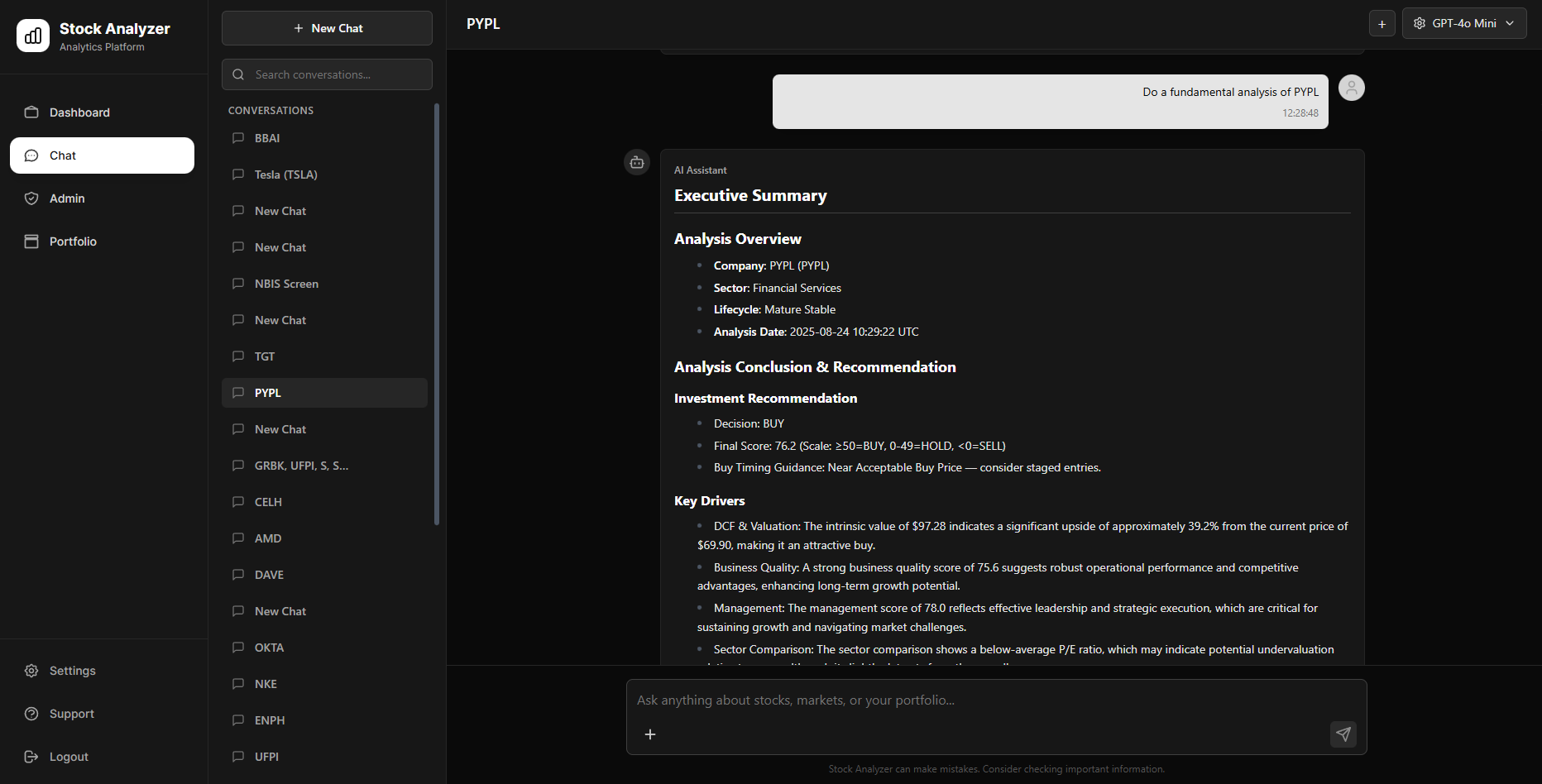

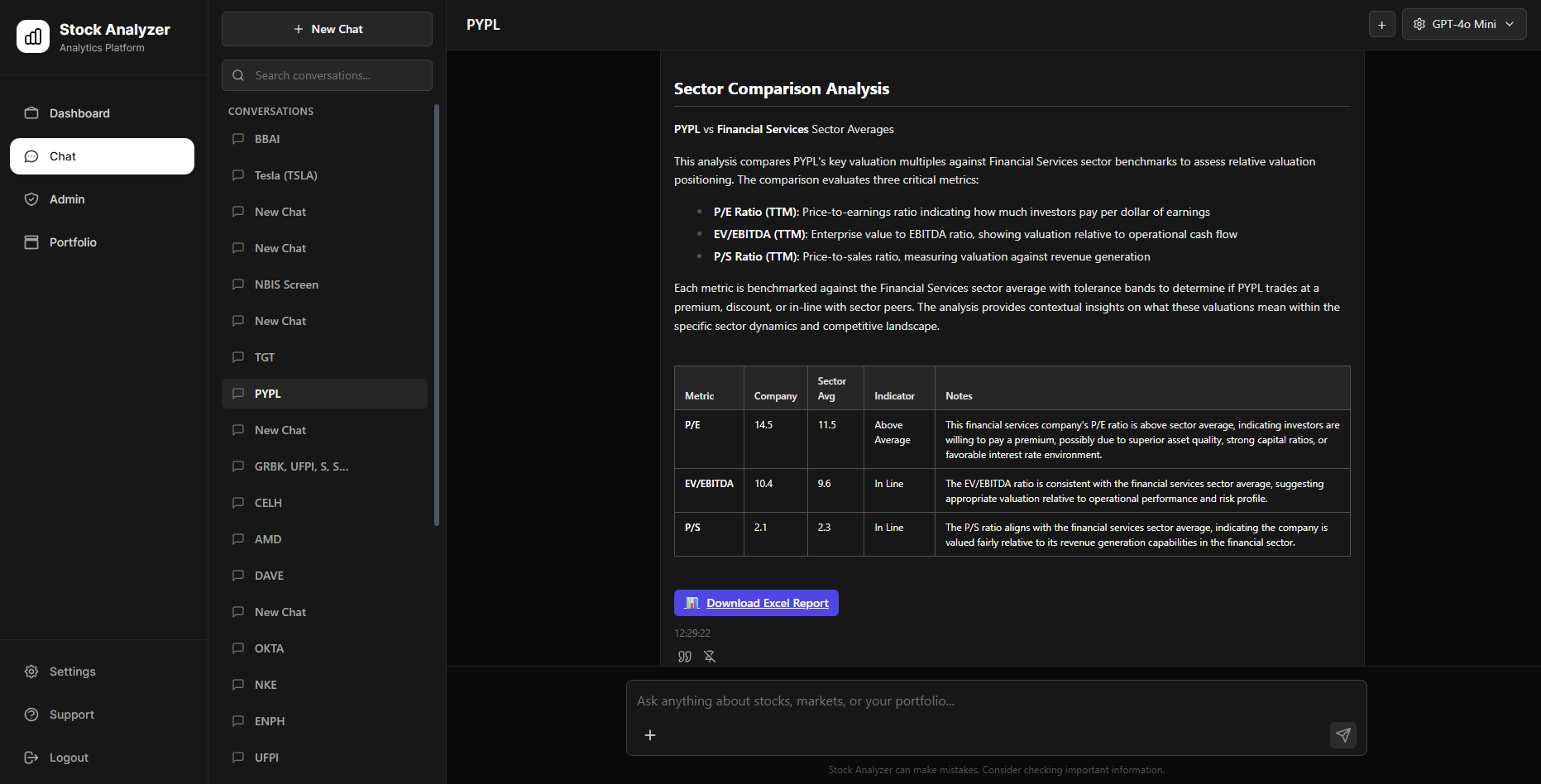

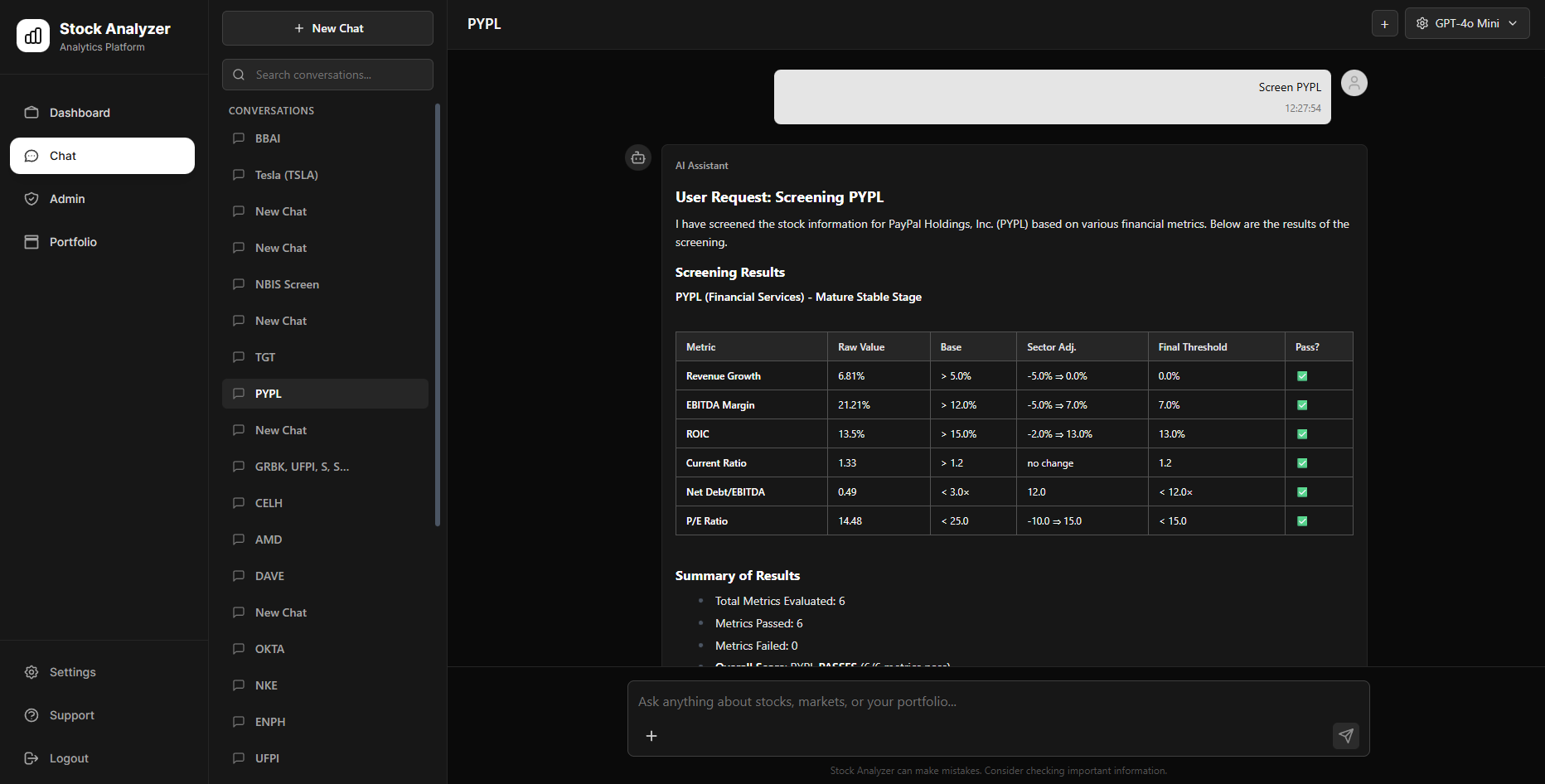

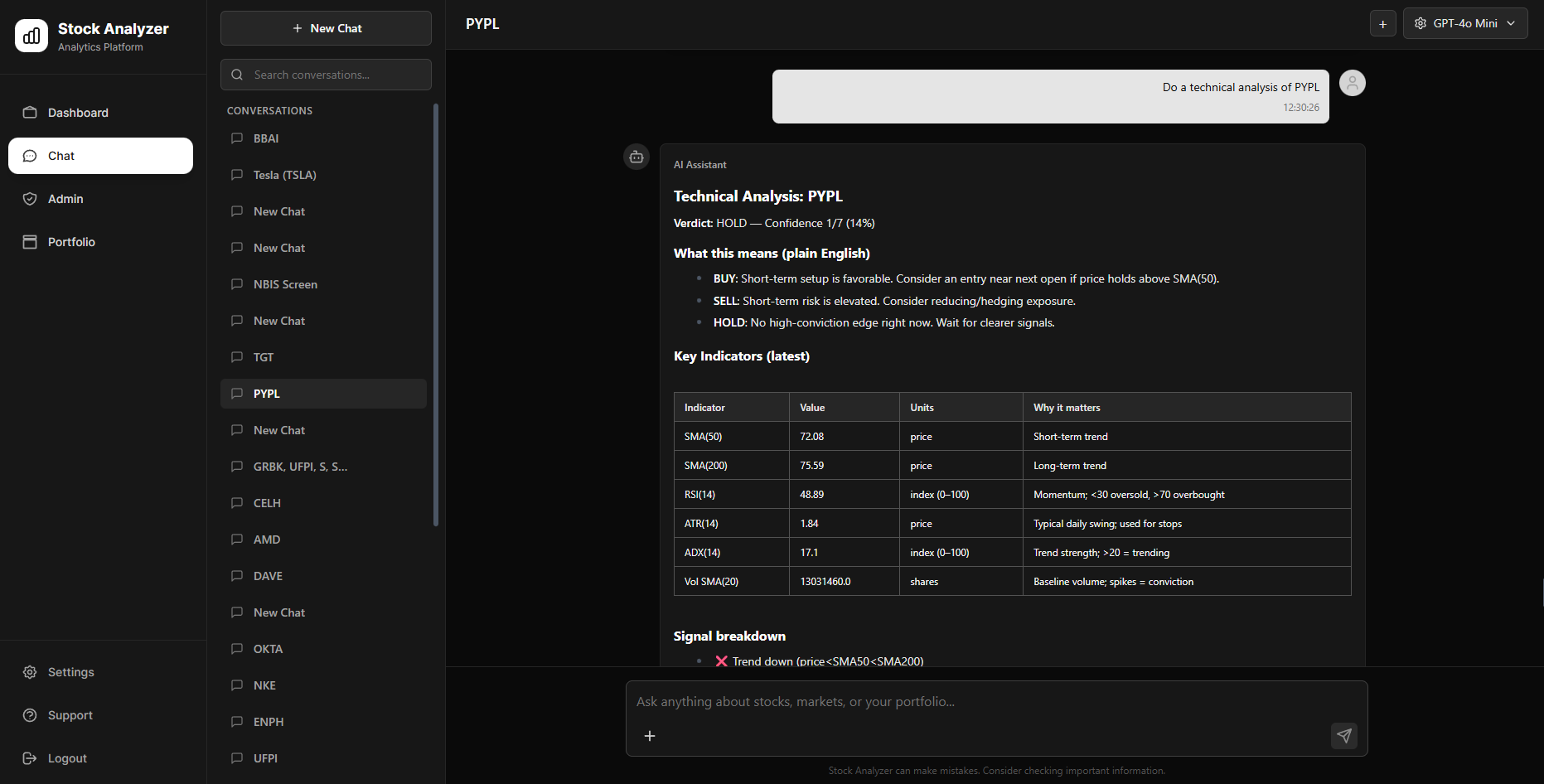

This stock analyzer platform is my most ambitious coding project to date. It is a full-stack financial analysis system that combines real-time market data, financial modeling, technical analysis, and an AI-driven chat interface into a single workflow. Users can screen stocks using company lifecycle- and sector-aware criteria, perform fundamental analysis including DCF valuation and business quality assessment, and run technical analysis with actionable trade recommendations.

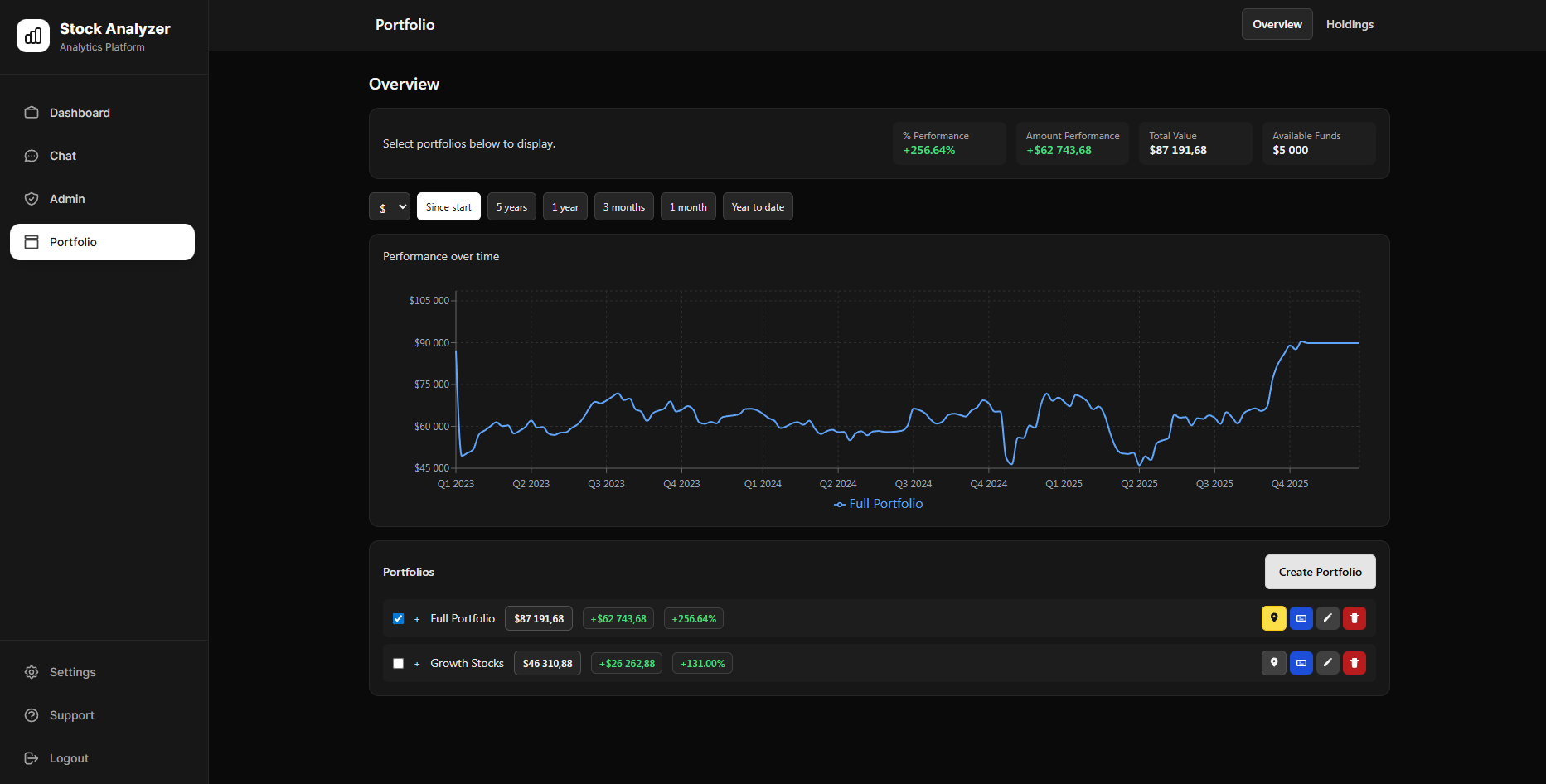

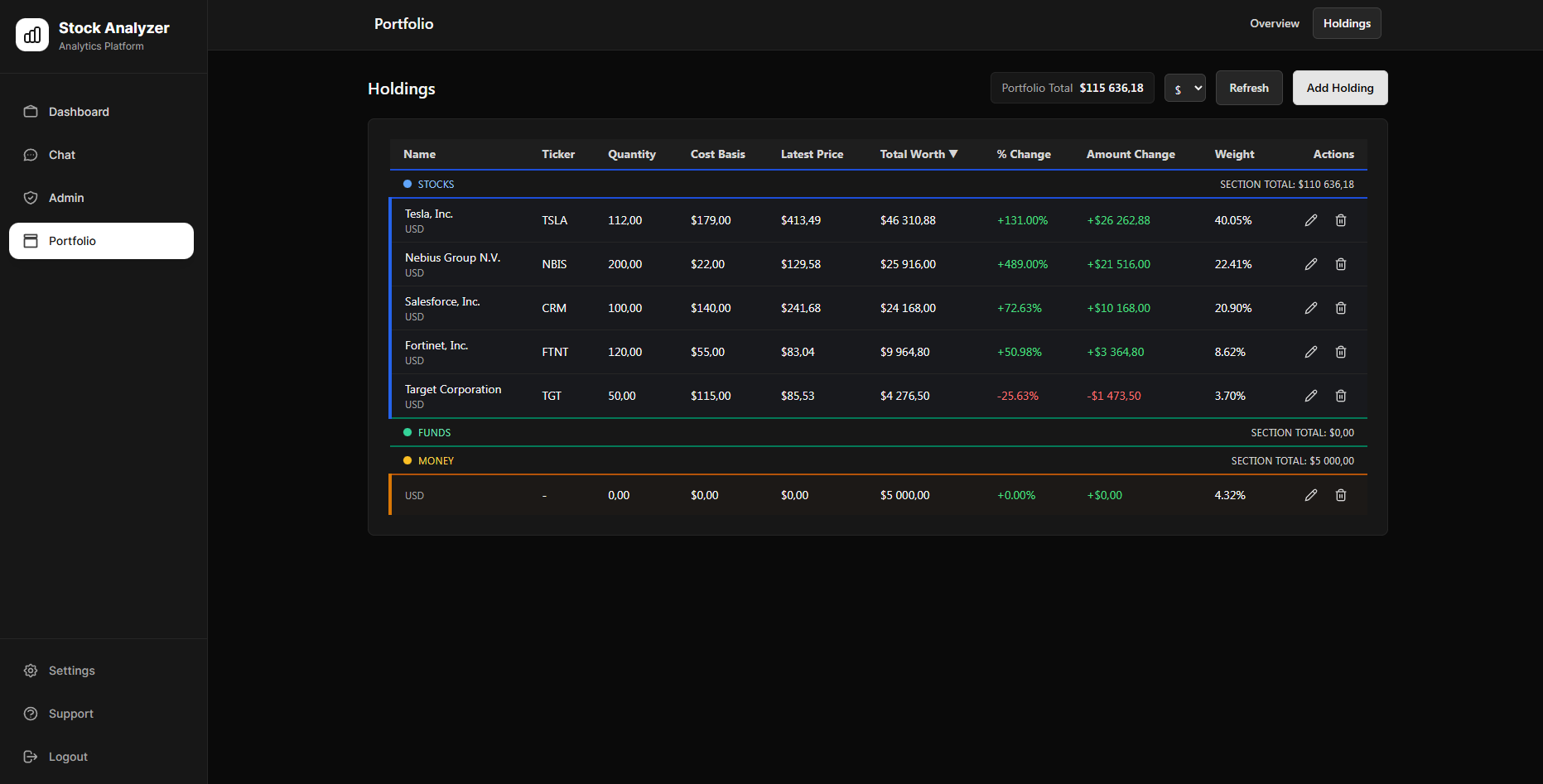

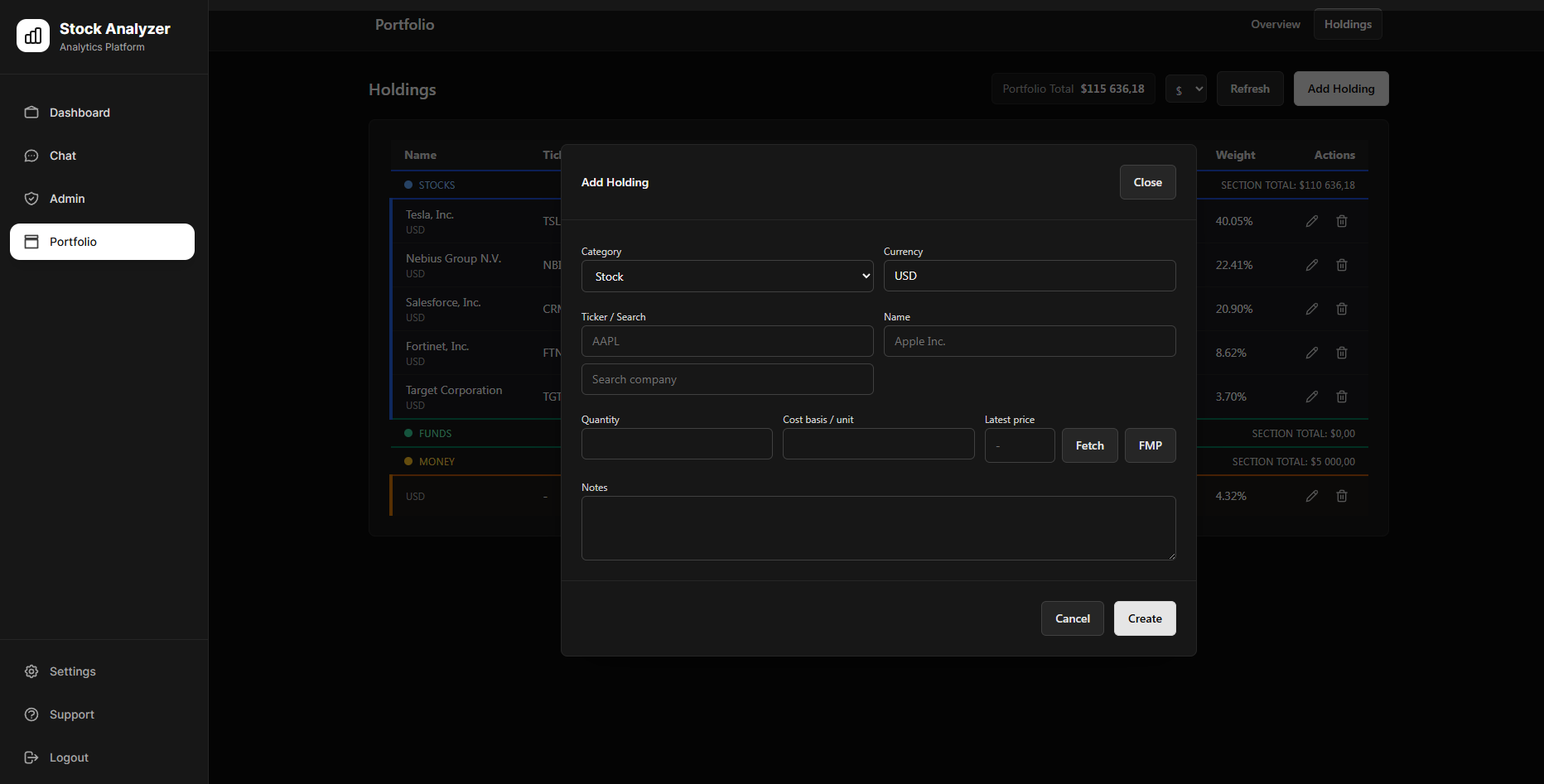

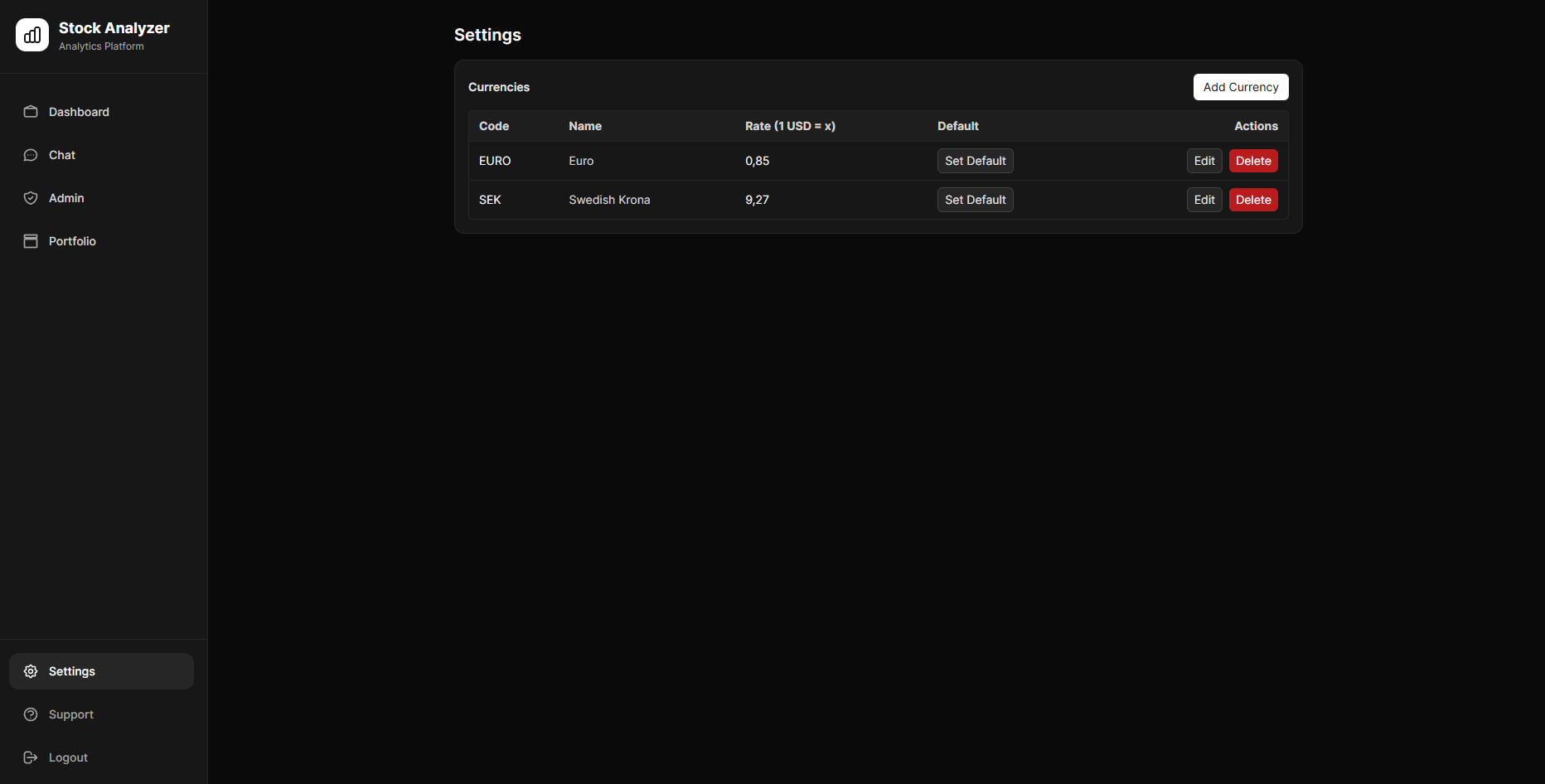

A conversational AI layer orchestrates multi-step analysis through natural language while maintaining context across long research sessions. The platform also includes portfolio tracking, a unified dashboard for ongoing analysis and notes, access to 130+ financial metrics, and professional Excel report generation. Built on a microservices architecture, the project reflects an attempt to combine solid financial logic, modern backend architecture, and practical AI integration into a single, usable system.

Why I Built It

Investing in financial assets, primarily stocks, has been a long-term interest of mine that gradually evolved into a serious passion. Over the years, I developed my own valuation models and analysis frameworks, most of them built in Excel. As these tools grew more complex, the process became slow, manual, and increasingly difficult to maintain. This eventually pushed me to rethink the entire workflow: automating data collection and calculations, centralizing portfolio holdings and potential investments, and integrating AI directly into the analysis process to challenge and refine investment theses.

At the same time, I wanted to explore AI as a full-time pair programmer while building a large-scale system. Combining my financial background with AI-assisted development using Cursor and GitHub Copilot, I started the project in summer 2025 and iterated on it whenever time allowed, reaching a production-ready platform by November 2025 that I now use almost daily.

How It Works

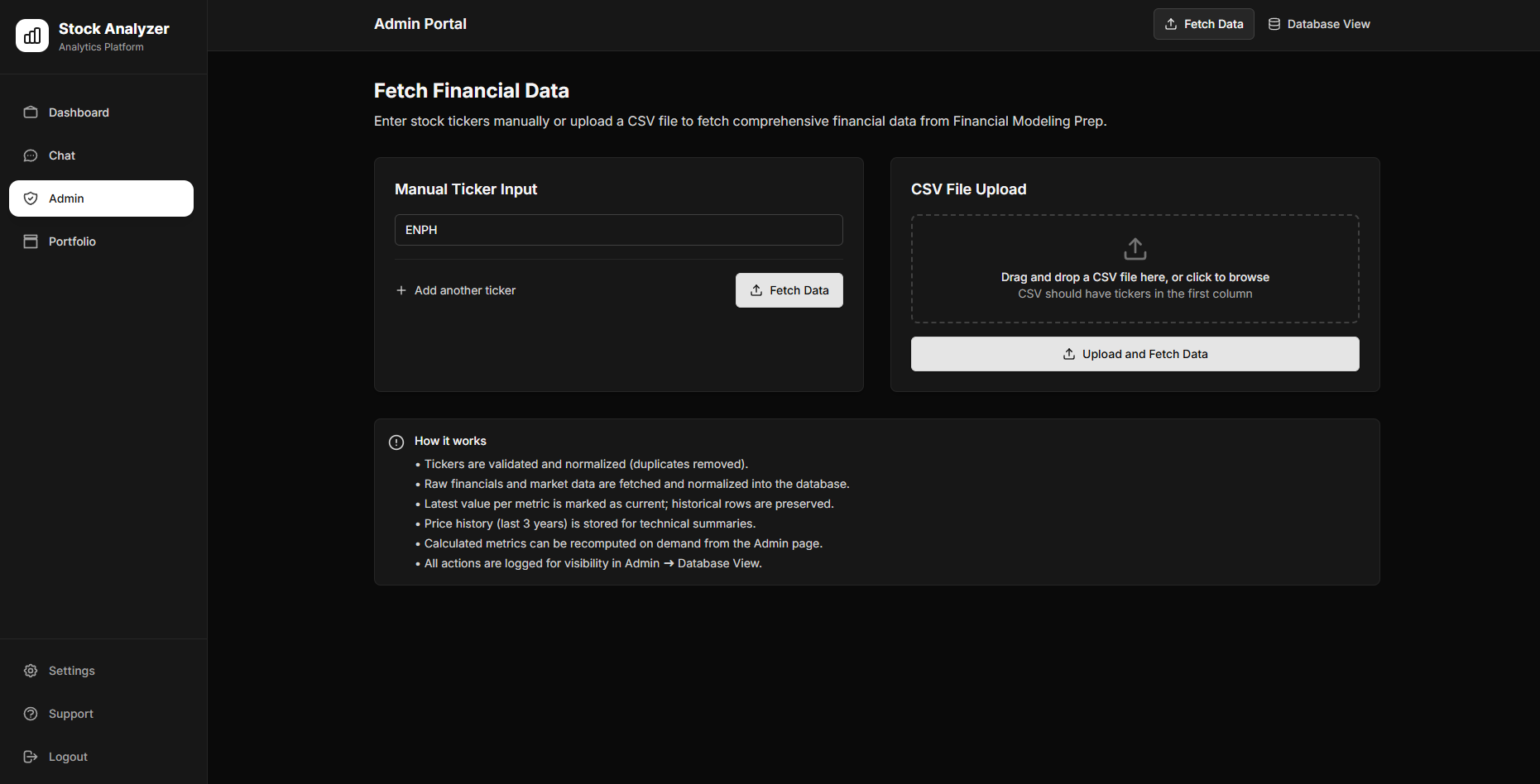

The system is built as a set of loosely coupled microservices, each responsible for a distinct part of the analysis pipeline. A React-based frontend (dashboard, chat, portfolio, admin) provides the user interface, while a central Chat Service acts as the orchestration layer. User requests enter through the chat interface and are interpreted using an LLM with function calling. The Chat Service maps intent to concrete service actions via a tool registry, coordinates execution, streams results in real time, and maintains conversational context across long sessions.

All analysis is driven by a dedicated Data Service that asynchronously retrieves financial data from external APIs (FMP and Yahoo Finance), normalizes and validates it, and persists over 100 metrics in PostgreSQL. A dependency-aware calculation engine derives secondary metrics (e.g., WACC, Z-Score, growth rates, margins) while enforcing consistent units and precision.
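To make the idea of a dependency-aware calculation engine concrete, here is a minimal sketch using Python's standard-library `graphlib`. The metric names, formulas, and the `calculate` helper are assumptions made for illustration; the platform's actual metric definitions are not shown in this write-up.

```python
from graphlib import TopologicalSorter

# Hypothetical derived metrics: name -> (dependencies, formula).
DERIVED = {
    "gross_margin": (
        ("revenue", "gross_profit"),
        lambda m: m["gross_profit"] / m["revenue"],
    ),
    "equity_weight": (
        ("market_cap", "total_debt"),
        lambda m: m["market_cap"] / (m["market_cap"] + m["total_debt"]),
    ),
    "wacc": (
        ("equity_weight", "cost_of_equity", "cost_of_debt", "tax_rate"),
        lambda m: m["equity_weight"] * m["cost_of_equity"]
        + (1 - m["equity_weight"]) * m["cost_of_debt"] * (1 - m["tax_rate"]),
    ),
}


def calculate(raw: dict[str, float], precision: int = 4) -> dict[str, float]:
    """Evaluate derived metrics in dependency order with uniform rounding."""
    graph = {name: set(deps) for name, (deps, _) in DERIVED.items()}
    metrics = dict(raw)
    for name in TopologicalSorter(graph).static_order():
        if name in DERIVED:  # raw inputs also appear in the order; skip them
            metrics[name] = round(DERIVED[name][1](metrics), precision)
    return metrics


# Made-up inputs, chosen only to exercise the sketch.
raw = {
    "revenue": 1000.0, "gross_profit": 400.0,
    "market_cap": 800.0, "total_debt": 200.0,
    "cost_of_equity": 0.10, "cost_of_debt": 0.05, "tax_rate": 0.25,
}
result = calculate(raw)
print(result["gross_margin"], result["wacc"])
```

Because `wacc` depends on `equity_weight`, the topological order guarantees the weight is computed first, and a circular definition would raise `graphlib.CycleError` instead of silently producing a wrong value.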

Specialized services consume this data:

- The Screener Service applies lifecycle-aware and sector-adjusted thresholds to identify candidates and generate structured screening reports.

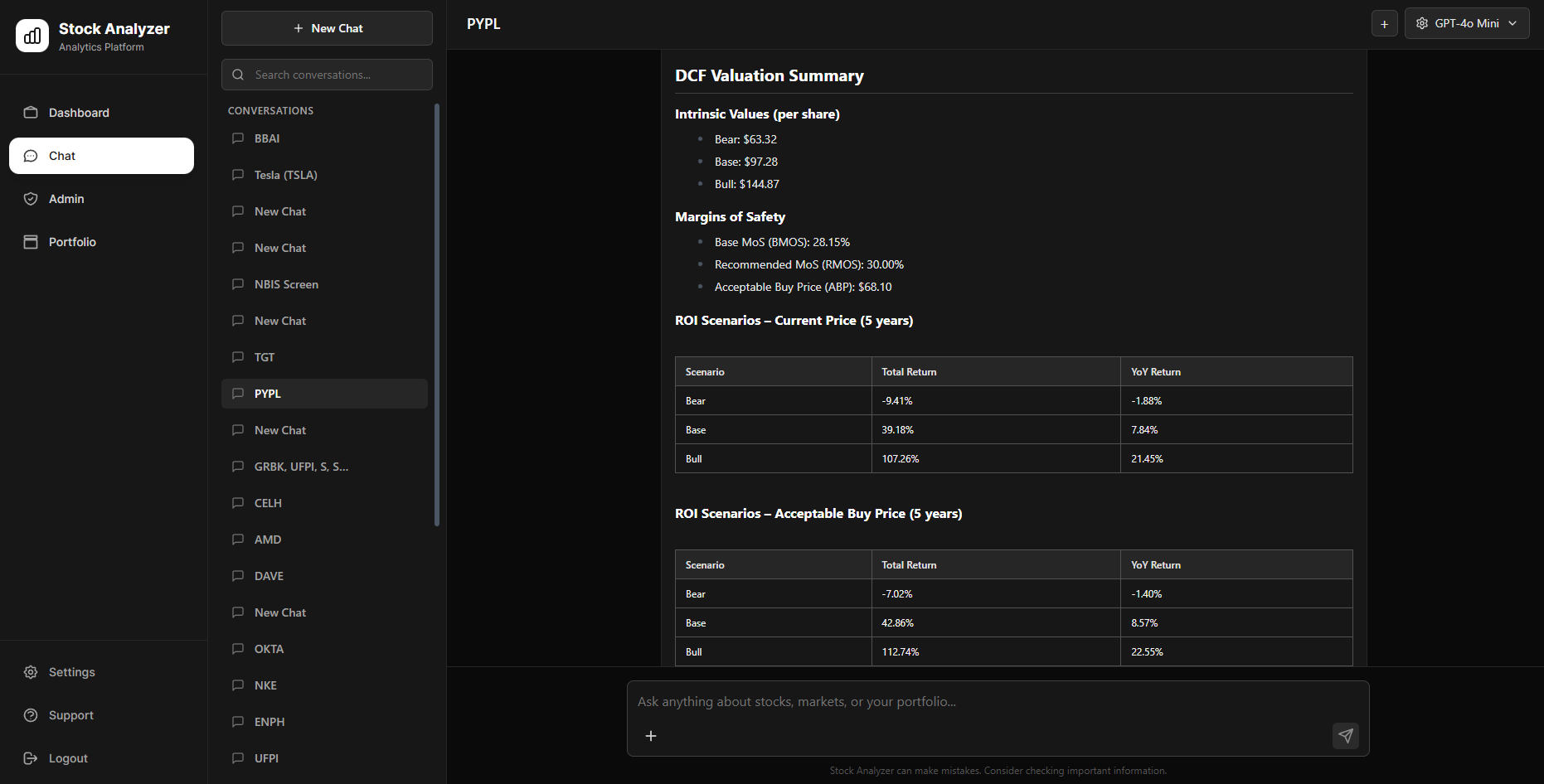

- The Fundamentals Service performs intrinsic valuation using a DCF engine with bear, base, and bull scenarios, computes Piotroski F-Scores, and evaluates business quality using multi-metric trend analysis.

- The Technical Service processes OHLCV data, analyzes price action, momentum, volatility, and volume to produce trade setups, risk-based position sizing, and interactive charts.
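As an illustration of risk-based position sizing, the sketch below uses the common fixed-fractional rule: risk a fixed share of account equity per trade and derive the share count from the distance between entry and stop. This is an assumed approach with a hypothetical `position_size` function, not necessarily the rule the Technical Service applies.

```python
import math


def position_size(equity: float, risk_fraction: float,
                  entry: float, stop: float) -> int:
    """Whole shares such that a stop-out loses at most risk_fraction of equity."""
    risk_per_share = abs(entry - stop)
    if risk_per_share == 0:
        raise ValueError("entry and stop must differ")
    return math.floor(equity * risk_fraction / risk_per_share)


# Made-up numbers: risk 1% of a 50,000 account with a 5-point stop distance.
shares = position_size(equity=50_000, risk_fraction=0.01, entry=100.0, stop=95.0)
print(shares)
```

Rounding down keeps the realized risk at or below the chosen budget.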

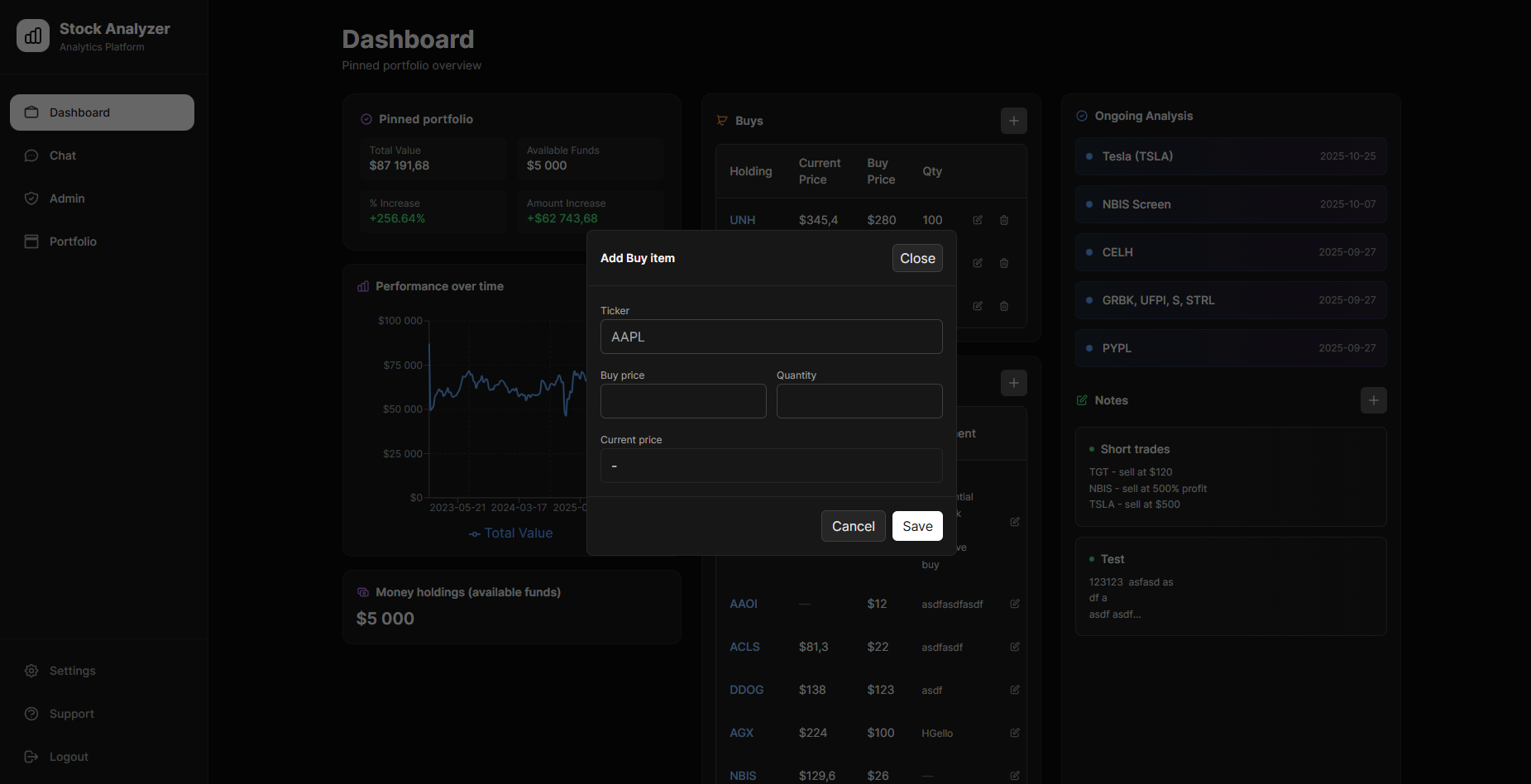

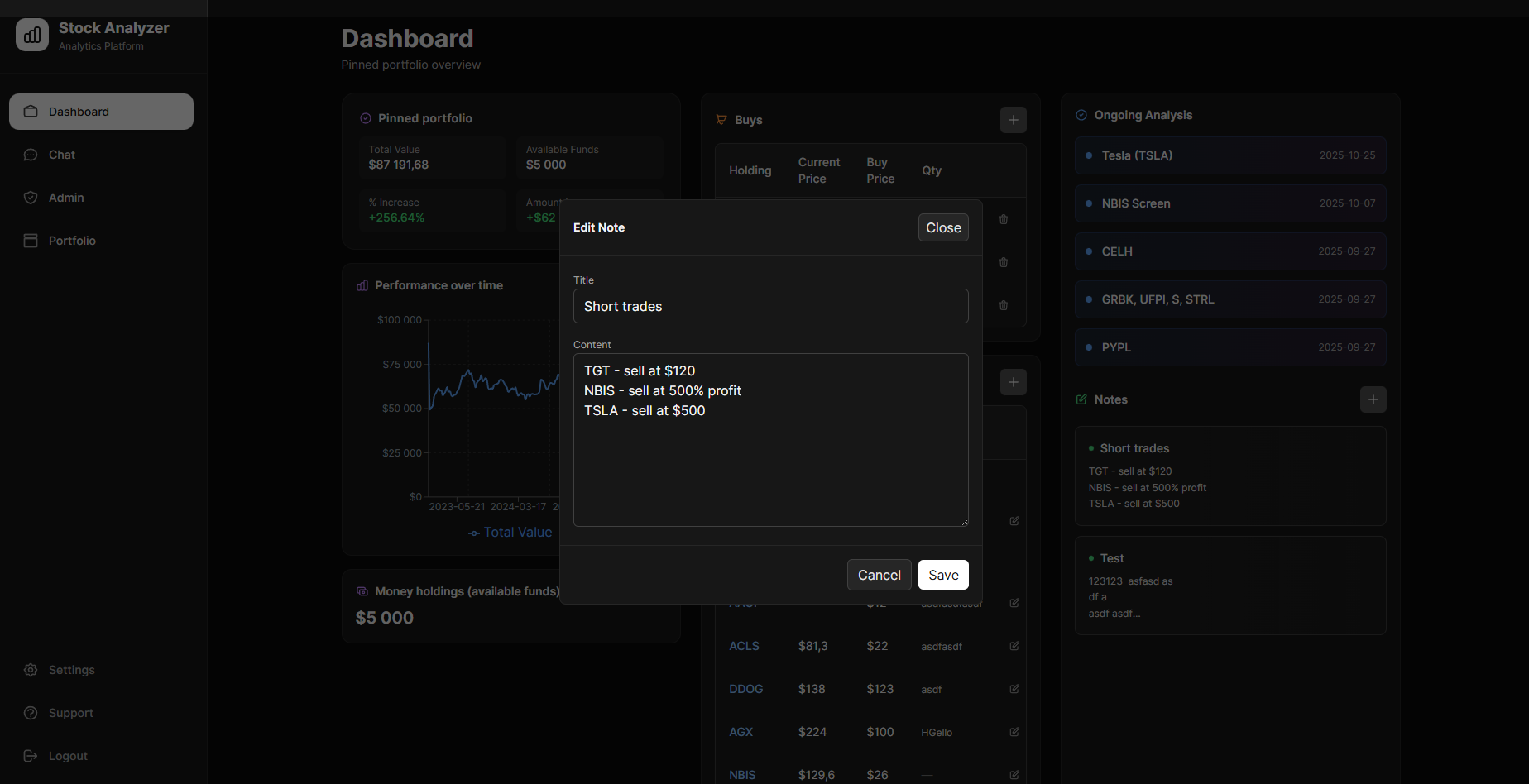

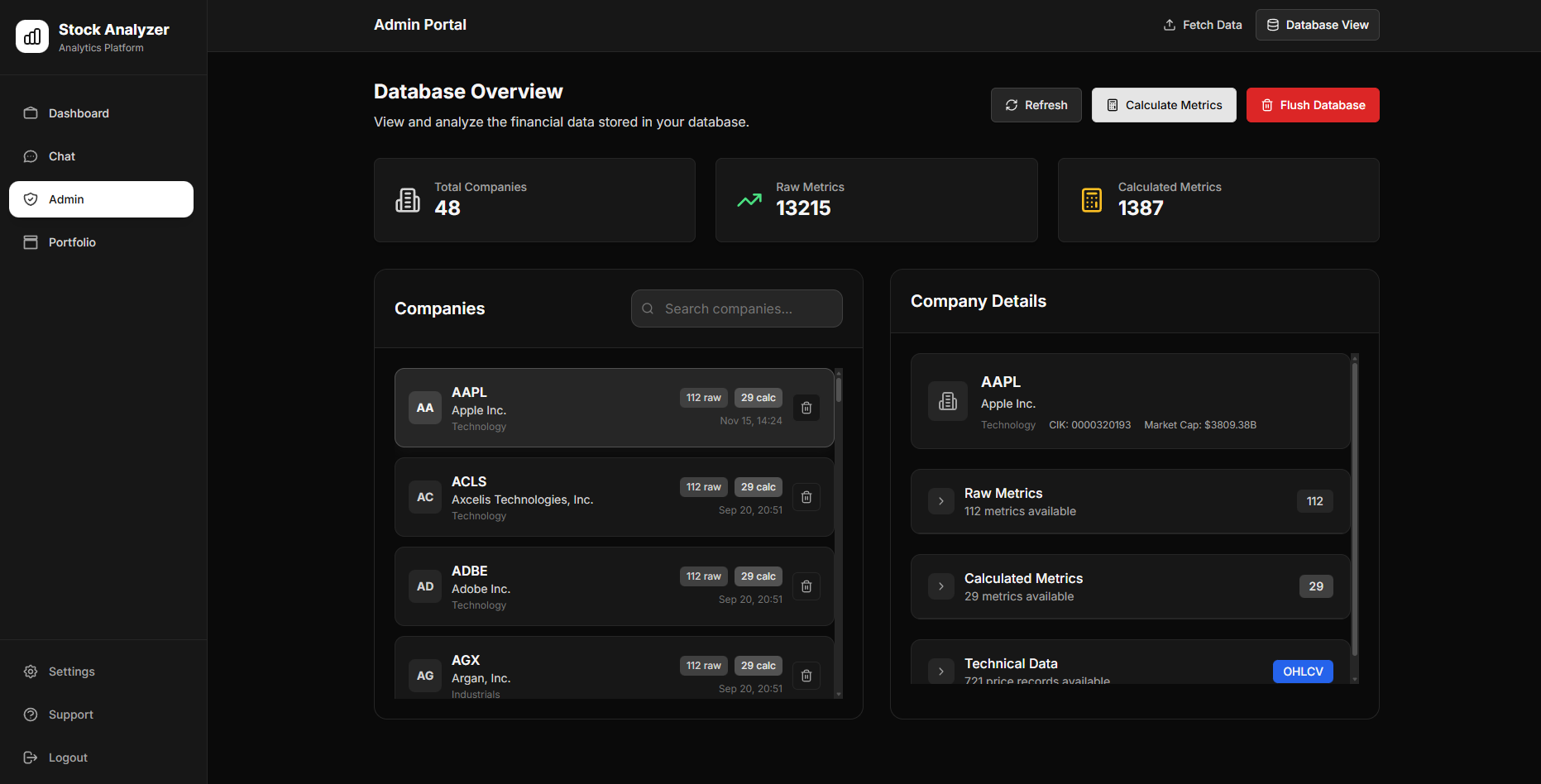

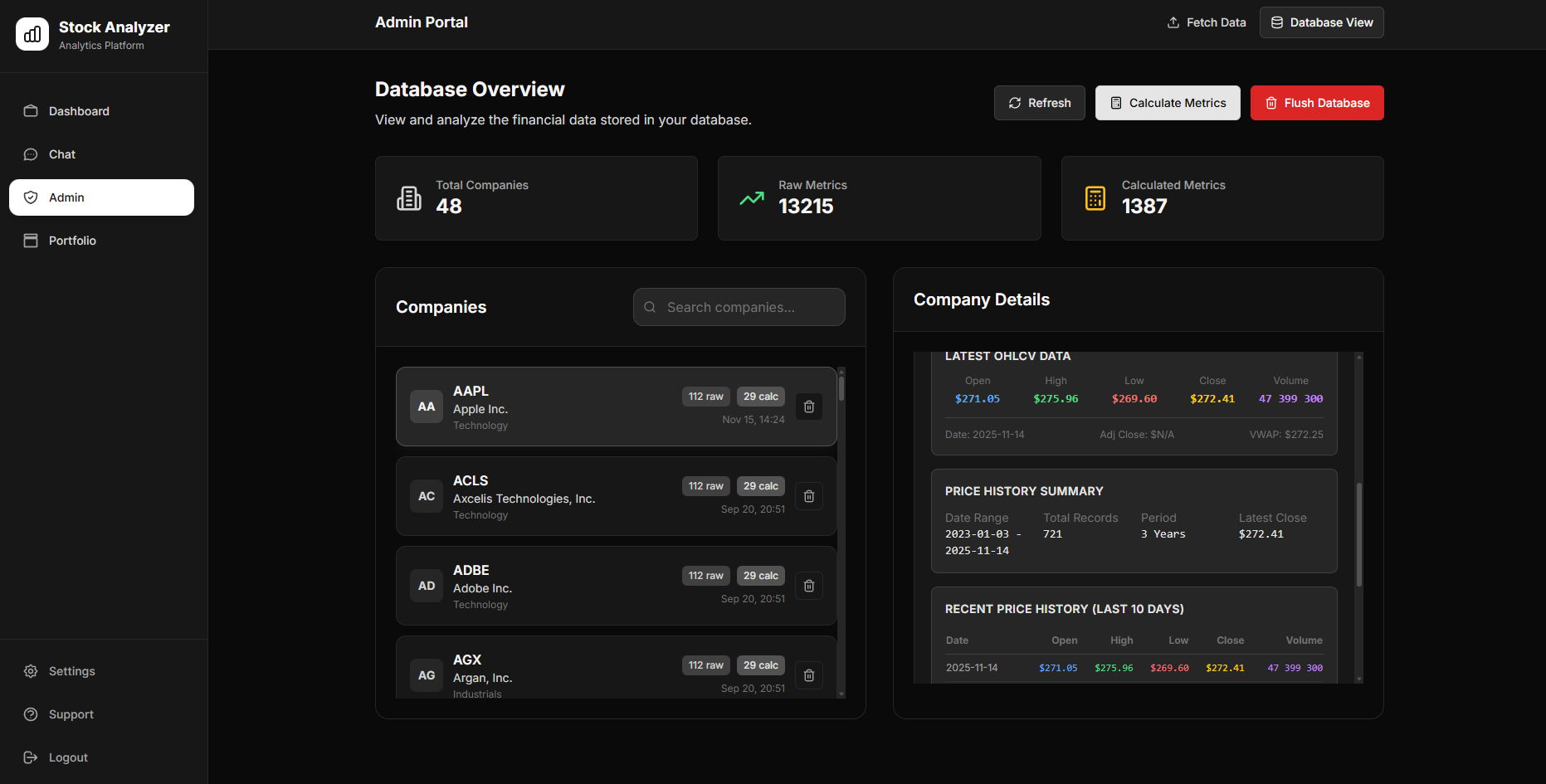

Conversation state is managed with a token-aware memory system combining recent message windows, rolling summaries, and pinned references, allowing long-running analytical discussions without context loss. Results are streamed back to the user as analyses complete, with downloadable Excel reports and interactive charts. Additionally, the dashboard page shows an overview of portfolio performance, watch/buylists, ongoing analyses, and notes, while the portfolio page tracks holdings and historical results. The admin interface provides manual data fetching, configuration, and monitoring, completing a unified platform that combines chat-driven analysis with structured oversight.
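The combination of pinned references, a rolling summary, and a recent-message window can be sketched as follows. The `Memory` class and its fields are hypothetical, and `count_tokens` is a whitespace-based stand-in so the example runs without dependencies; a real system would count tokens with a tokenizer such as tiktoken.

```python
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    """Stand-in token counter (whitespace words), an assumption for this sketch."""
    return len(text.split())


@dataclass
class Memory:
    budget: int                                   # max tokens in the assembled context
    pinned: list[str] = field(default_factory=list)
    summary: str = ""                             # rolling summary of older turns
    messages: list[str] = field(default_factory=list)

    def build_context(self) -> list[str]:
        """Pinned items and summary first, then as many recent messages as fit."""
        fixed = self.pinned + ([self.summary] if self.summary else [])
        remaining = self.budget - sum(count_tokens(t) for t in fixed)
        window: list[str] = []
        for msg in reversed(self.messages):       # walk from newest to oldest
            cost = count_tokens(msg)
            if cost > remaining:
                break
            window.append(msg)
            remaining -= cost
        return fixed + window[::-1]               # restore chronological order


memory = Memory(
    budget=10,
    pinned=["AAPL base case"],
    summary="User prefers DCF",
    messages=["old message one two three", "what about margins", "show the chart"],
)
context = memory.build_context()
print(context)
```

Older messages that no longer fit the window are the ones a rolling summary would absorb, which is how continuity can survive after the raw text is dropped.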

Tech & Implementation

Backend Stack

- Python (FastAPI) – Core backend framework across all services (data, screener, fundamentals, technical, chat orchestration)

- PostgreSQL – Primary relational database for financial data, metrics, chat history, and system state

- SQLAlchemy 2.0 – ORM for schema modeling, relationships, and async database access

- Alembic – Database migrations, schema evolution, and controlled data seeding

- Pydantic v2 – Request/response validation and environment-based configuration

- OpenAI SDK – LLM integration for conversational analysis, tool orchestration, and streaming responses

- Tavily API – Web search integration for real-time external context

- Financial Modeling Prep API – Primary financial data provider

- Pandas & NumPy – Financial data processing and technical indicator calculations

- Plotly – Interactive chart generation (standalone HTML outputs)

- openpyxl – Multi-sheet Excel report generation with formulas and formatting

- passlib – Password hashing using bcrypt, configured with a `CryptContext` and appropriate bcrypt salt rounds

- python-jose – JWT token encoding/decoding

- httpx – Async HTTP client for inter-service communication and external API calls

- tiktoken – Token counting library for LLM context management

Frontend Stack

- React – UI framework for dashboard, chat, portfolio, and admin interfaces

- Vite – Build tool and dev server with optimized production output

- Tailwind CSS – Utility-first styling with dark mode support

- React Router – Client-side routing and protected views

- Axios – Centralized API communication layer

- Streaming via Fetch API – Incremental rendering of chat responses using streamed HTTP responses

- react-markdown – Rich Markdown rendering for chat output

- Recharts – Portfolio and dashboard visualizations

- Lucide React – Icon system for consistent UI

- Additional UI Utilities – React Query (admin), date-fns, dropzone, and class utilities where applicable

Data & Database Design

- Normalized PostgreSQL schema with clearly defined service boundaries

- UUID primary keys for distributed-system compatibility

- JSONB columns for flexible metric values, calculation inputs, and logs

- Composite indexes for high-frequency query paths

- Foreign keys with cascade deletes to enforce integrity

- Database views for simplified access:

  - `latest_raw_metrics`

  - `latest_calculated_metrics`

  - `company_metrics_summary`

- Pattern-based search for conversation history

- Historical data tracking for metrics and price series, including latest vs. historical state
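A "latest" view such as `latest_raw_metrics` can be pictured as a filter over a history table that keeps only the newest row per company and metric. The sketch below uses Python's built-in `sqlite3` instead of PostgreSQL purely so it is self-contained; the `raw_metrics` table, its columns, the index name, and the sample rows are all assumptions, not the platform's actual schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE raw_metrics (
    ticker TEXT NOT NULL,
    metric TEXT NOT NULL,
    as_of  TEXT NOT NULL,   -- ISO date, so string order matches date order
    value  REAL NOT NULL
);

-- Composite index covering the lookup used by the view.
CREATE INDEX ix_raw_metrics_lookup ON raw_metrics (ticker, metric, as_of);

-- Keep only the most recent row per (ticker, metric).
CREATE VIEW latest_raw_metrics AS
SELECT r.ticker, r.metric, r.as_of, r.value
FROM raw_metrics AS r
WHERE r.as_of = (
    SELECT MAX(i.as_of)
    FROM raw_metrics AS i
    WHERE i.ticker = r.ticker AND i.metric = r.metric
);
""")

# Made-up rows for two fictional tickers.
conn.executemany(
    "INSERT INTO raw_metrics VALUES (?, ?, ?, ?)",
    [
        ("AAA", "revenue", "2023-12-31", 100.0),
        ("AAA", "revenue", "2024-12-31", 110.0),
        ("BBB", "revenue", "2024-12-31", 50.0),
    ],
)
latest = conn.execute(
    "SELECT ticker, as_of, value FROM latest_raw_metrics ORDER BY ticker"
).fetchall()
print(latest)
```

The history table keeps every observation, while the view gives downstream services a stable way to read the current state.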

Development & Infrastructure

- IDE – Cursor and VSCode with GitHub Copilot

- Docker – Containerization of all backend and frontend services

- Docker Compose – Local and production orchestration

- Redis – Caching and shared state for chat and data services

- Nginx – Reverse proxy and static asset serving

- Health Checks – Standardized `/health` endpoints across all services

- Logging – Consistent application-level logging

- Configuration Mgmt – Centralized environment-based configuration

- Local Server – System is deployed on my local server

What Stands Out

What differentiates the platform is its end-to-end design. Screening, fundamental valuation, and technical analysis aren’t siloed tools but a single, coherent pipeline. Screening adapts to company lifecycle and sector, avoiding generic thresholds and producing context-aware results.
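One way to picture lifecycle- and sector-aware screening is a base threshold set per lifecycle stage, shifted by a sector adjustment. Everything in this sketch, including the stage names, the threshold values, and the `passes` helper, is invented for illustration and does not reproduce the platform's criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    min_revenue_growth: float
    min_operating_margin: float


# Hypothetical values, not the platform's real thresholds.
BY_LIFECYCLE = {
    "growth": Thresholds(min_revenue_growth=0.20, min_operating_margin=-0.05),
    "mature": Thresholds(min_revenue_growth=0.03, min_operating_margin=0.12),
}
SECTOR_MARGIN_ADJUSTMENT = {"software": 0.05, "retail": -0.07}


def thresholds_for(lifecycle: str, sector: str) -> Thresholds:
    """Start from the lifecycle baseline and shift the margin bar by sector."""
    base = BY_LIFECYCLE[lifecycle]
    shift = SECTOR_MARGIN_ADJUSTMENT.get(sector, 0.0)
    return Thresholds(base.min_revenue_growth, base.min_operating_margin + shift)


def passes(company: dict) -> bool:
    t = thresholds_for(company["lifecycle"], company["sector"])
    return (company["revenue_growth"] >= t.min_revenue_growth
            and company["operating_margin"] >= t.min_operating_margin)


retailer = {"lifecycle": "mature", "sector": "retail",
            "revenue_growth": 0.04, "operating_margin": 0.06}
software = {**retailer, "sector": "software"}
print(passes(retailer), passes(software))
```

The same 6% operating margin clears the bar for a mature retailer but not for a mature software company, which is the kind of context a single generic threshold would miss.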

Valuation stays grounded in my proprietary models and logic. The DCF engine uses standard WACC-based, multi-scenario analysis (bear, base, bull) with explicit margin-of-safety calculations. Results are interactive or exportable as a multi-tab Excel report with formulas, structure, and formatting suitable for additional number crunching. Analysis outputs are actionable, delivering concrete entries or acceptable buy prices, exits, sizing, timing, and additional noteworthy insights.
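For readers unfamiliar with the mechanics, a textbook WACC-discounted DCF with three scenarios and a margin-of-safety figure can be sketched as below. This is a generic formulation with a Gordon-growth terminal value, not the proprietary model; the function names, scenario inputs, and the particular margin-of-safety definition are assumptions.

```python
def dcf_value_per_share(fcf: float, growth: float, wacc: float,
                        terminal_growth: float, years: int,
                        net_debt: float, shares: float) -> float:
    """Discount projected free cash flow plus a Gordon-growth terminal value."""
    if wacc <= terminal_growth:
        raise ValueError("wacc must exceed terminal_growth")
    present_value = 0.0
    cash_flow = fcf
    for year in range(1, years + 1):
        cash_flow *= 1 + growth
        present_value += cash_flow / (1 + wacc) ** year
    terminal = cash_flow * (1 + terminal_growth) / (wacc - terminal_growth)
    present_value += terminal / (1 + wacc) ** years
    return (present_value - net_debt) / shares   # equity value per share


def margin_of_safety(intrinsic: float, price: float) -> float:
    """One common definition: discount of price relative to intrinsic value."""
    return (intrinsic - price) / intrinsic


# Made-up scenario assumptions: (FCF growth, WACC).
SCENARIOS = {"bear": (0.02, 0.10), "base": (0.06, 0.09), "bull": (0.10, 0.08)}

values = {
    name: dcf_value_per_share(fcf=100.0, growth=g, wacc=w, terminal_growth=0.02,
                              years=5, net_debt=200.0, shares=50.0)
    for name, (g, w) in SCENARIOS.items()
}
for name, value in values.items():
    print(name, round(value, 2), round(margin_of_safety(value, price=25.0), 3))
```

Comparing the same market price against all three scenario values shows how sensitive the margin of safety is to the growth and discount-rate assumptions.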

The chat layer acts as an orchestration engine, translating natural language into multi-step analytical workflows. Pinned context and rolling summaries support deep, long-running discussions without losing continuity.
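The mapping from an LLM tool call to a concrete service action can be sketched with a small registry. The tool names, their return values, and the `dispatch` helper are hypothetical; the only thing borrowed from real function-calling APIs is the convention that arguments arrive as a JSON string.

```python
import json
from typing import Callable

TOOLS: dict[str, Callable[..., dict]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn: Callable[..., dict]) -> Callable[..., dict]:
        TOOLS[name] = fn
        return fn
    return register


@tool("run_screener")
def run_screener(sector: str) -> dict:
    # Placeholder: a real handler would call the screener service over HTTP.
    return {"service": "screener", "sector": sector}


@tool("run_dcf")
def run_dcf(ticker: str) -> dict:
    # Placeholder: a real handler would call the fundamentals service.
    return {"service": "fundamentals", "ticker": ticker}


def dispatch(tool_call: dict) -> dict:
    """Execute one tool call shaped like {'name': ..., 'arguments': '<json>'}."""
    fn = TOOLS.get(tool_call["name"])
    if fn is None:
        return {"error": f"unknown tool: {tool_call['name']}"}
    return fn(**json.loads(tool_call["arguments"]))


# A multi-step workflow is then a sequence of dispatched calls.
plan = [
    {"name": "run_screener", "arguments": '{"sector": "software"}'},
    {"name": "run_dcf", "arguments": '{"ticker": "AAA"}'},
]
results = [dispatch(call) for call in plan]
print(results)
```

Returning an error payload for unknown tools, instead of raising, lets the orchestrator feed the failure back to the model and continue the conversation.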

Live web search adds real-time market context, while streaming results keep long tasks responsive. A clean microservices architecture with full audit logging ensures scalability and traceability. Beyond chat, users can explore company data, manage portfolios, and track performance, watch/buylists, and notes – tying deep analysis and day-to-day portfolio oversight into a single workflow.

What I Learned

This project pushed me deep into system and architecture design. I learned how to define clear microservice boundaries, coordinate distributed workflows, manage inter-service communication, and share infrastructure without creating tight coupling.

On the financial side, I translated my proprietary Excel-based valuation models into deterministic, precise, and testable code.

Integrating LLMs taught me a lot about how to structure prompts, implement tool-based workflows, manage conversation context, and support streaming responses at scale. Designing rolling summaries and token-aware context compression was key to keeping long conversations coherent.

From an infrastructure perspective, this became my own masterclass in containerization, service orchestration, health monitoring, logging, and environment-based configuration, alongside implementing secure authentication flows, token management, and password handling.

Finally, I learned how to use AI effectively as a pair programmer. Broad, vague instructions and “vibe coding” quickly produced broken, low-quality code. What worked was starting with the overall architecture using Cursor’s Plan mode, outlining services – beginning with the data service – then progressively breaking each part down. Only after that did I use AI to generate code for narrowly defined components, reviewing, editing, and iterating piece by piece.

Resources

FastAPI: https://fastapi.tiangolo.com/

SQLAlchemy 2.0: https://docs.sqlalchemy.org/en/20/

PostgreSQL: https://www.postgresql.org/docs/

React: https://react.dev/

Docker: https://docs.docker.com/

OpenAI API: https://platform.openai.com/docs

Financial Modeling Prep API: https://site.financialmodelingprep.com/developer/docs/

Tavily Search API: https://docs.tavily.com/